What Types of AI Glasses Are Available on the Market Today?

AI glasses currently come in three main forms:

Displayless AI Glasses

These glasses focus on integrating AI technology into eyewear, emphasizing practical applications. They offer features like voice assistants, navigation, translation, health monitoring, and photography. Typically lightweight and sleek in design, they resemble regular glasses for comfortable, all-day wear. For example, the Ray-Ban Meta AI glasses weigh just 49 grams, almost indistinguishable from traditional frames, making them ideal for daily use.

Monochrome AR + AI Glasses

These integrate core components like motherboards, computing platforms, and optical display modules into a traditional glasses form factor. Users can activate an AI assistant via voice commands and view monochrome (usually green) text and symbols on the lens.

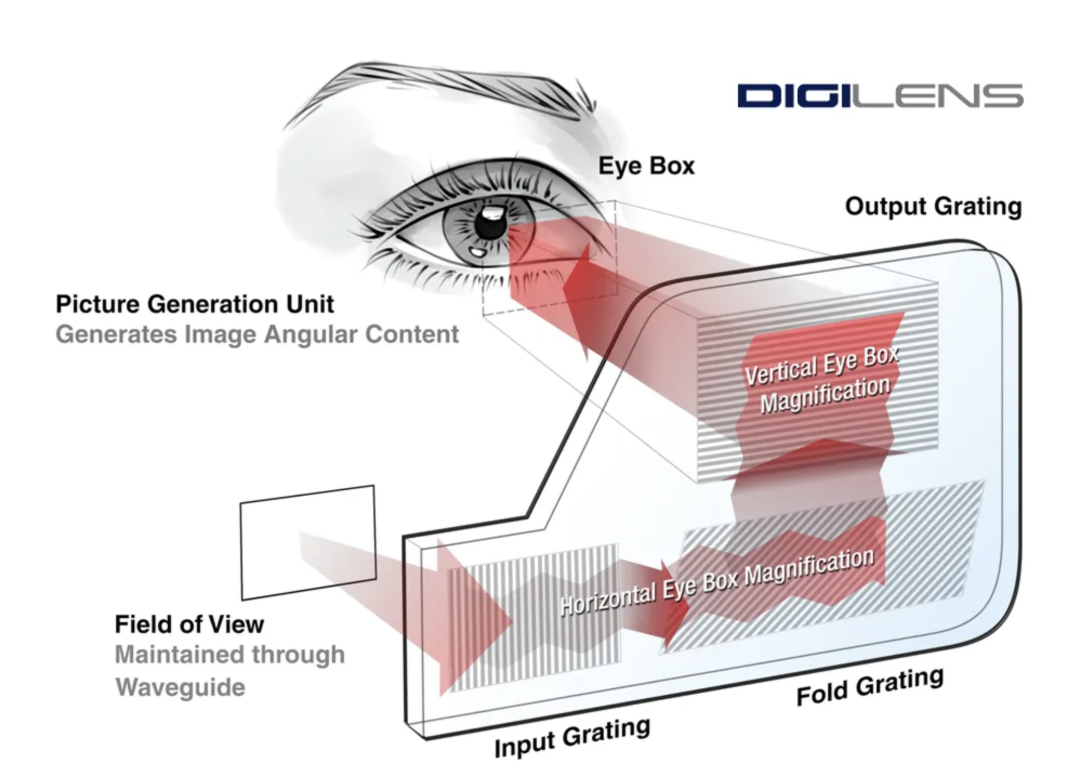

Full-Color AR + AI Glasses

Primarily AR-focused with added AI capabilities, these glasses employ various optical display solutions. For instance, RayNeo X2 by Thunderbird uses MicroLED + diffractive waveguide technology, while Meta’s conceptual "Orion" prototype adopts an innovative MicroLED + etched diffraction waveguide display. These glasses deliver high resolution, vibrant colors, and seamless integration with the real world, offering a more immersive visual experience.

Why Haven’t AI Glasses Truly Entered Our Lives Yet?

Despite these options, AI glasses haven’t become mainstream. The reason is simple: they aren’t truly "AI" yet—or at least, they’re far from being a functional extension of smartphones.

Let’s break this down from two key perspectives:

1. Low Information Density

Information absorption follows this hierarchy (from highest to lowest density):

Video > Images > Text > Sound > Smell/Taste

Displayless AI Glasses rely solely on audio output, which is inefficient.

Average speech comprehension: 120–150 words per minute (WPM).

Average reading speed: 400–600 WPM, roughly 3.3–4x faster than listening when the matching ends of the two ranges are compared (400/120 ≈ 3.3, 600/150 = 4).
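The listening-versus-reading comparison is simple arithmetic on the two quoted ranges, and it can be sketched in a few lines of Python. The function names `speedup_range` and `minutes_to_consume` are made up for this illustration, and the WPM figures are taken from the text as given rather than measured here.

```python
# WPM figures quoted in the article; treated as given, not independently measured.
LISTEN_WPM = (120, 150)  # (low, high) speech comprehension rate
READ_WPM = (400, 600)    # (low, high) reading rate


def speedup_range(read, listen):
    """Return two (low, high) speedup ranges of reading over listening.

    like_for_like pairs slow readers with slow listeners and fast with fast.
    widest pairs the extremes against each other (slowest reader vs. fastest
    listener, and fastest reader vs. slowest listener).
    """
    like_for_like = (read[0] / listen[0], read[1] / listen[1])
    widest = (read[0] / listen[1], read[1] / listen[0])
    return like_for_like, widest


def minutes_to_consume(words, wpm):
    """Minutes needed to take in `words` words at a rate of `wpm`."""
    return words / wpm


if __name__ == "__main__":
    like_for_like, widest = speedup_range(READ_WPM, LISTEN_WPM)
    print(f"like-for-like speedup: {like_for_like[0]:.2f}x to {like_for_like[1]:.2f}x")
    print(f"widest possible spread: {widest[0]:.2f}x to {widest[1]:.2f}x")

    # Hypothetical 300-word notification summary, heard vs. read.
    words = 300
    print(f"listening: {minutes_to_consume(words, LISTEN_WPM[1]):.2f} min at best")
    print(f"reading:   {minutes_to_consume(words, READ_WPM[0]):.2f} min at worst")
```

Even with the least favorable pairing (a slow reader against a fast listener), text on a display still comes out ahead of audio under these figures, which is the point the density argument rests on.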

Monochrome AR + AI Glasses are only marginally better.

Their display area is limited by field of view (FOV), which rarely exceeds 50 degrees in current models.

FOV depends on the waveguide’s refractive index, which is constrained by the material used (e.g., conventional glass, or silicon carbide in Meta’s "Orion").

Expanding FOV requires advancements in waveguide technology and materials science.
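To make the link between refractive index and FOV concrete, here is a rough sketch based on a commonly used idealized bound for a diffractive waveguide: light can only be guided between the critical angle and some maximum practical propagation angle, which gives sin(FOV/2) ≤ (n·sin θ_max − 1)/2 for a single wavelength along one axis. This is a simplification and not a model of any specific product. The function name `max_fov_degrees`, the 75-degree practical limit, and the index values below are assumptions chosen for illustration; real designs use other tricks to go beyond or fall short of this bound.

```python
import math


def max_fov_degrees(n, theta_max_deg=90.0):
    """Idealized one-axis FOV bound (degrees) for a waveguide of index n.

    Assumes a single wavelength and that guided rays must sit between the
    critical angle and theta_max_deg inside the waveguide:
        sin(FOV / 2) <= (n * sin(theta_max) - 1) / 2
    """
    span = (n * math.sin(math.radians(theta_max_deg)) - 1.0) / 2.0
    if span <= 0.0:
        return 0.0  # no guided angular range at all
    span = min(span, 1.0)  # asin is only defined up to 1
    return 2.0 * math.degrees(math.asin(span))


if __name__ == "__main__":
    # Approximate, illustrative indices (assumptions, not from the article).
    materials = {
        "ordinary glass (~1.5)": 1.5,
        "high-index glass (~1.8)": 1.8,
        "high-index glass (~2.0)": 2.0,
        "silicon carbide (~2.65)": 2.65,
    }
    for name, n in materials.items():
        ideal = max_fov_degrees(n)
        practical = max_fov_degrees(n, theta_max_deg=75.0)
        print(f"{name}: ideal bound {ideal:.1f} deg, with 75 deg limit {practical:.1f} deg")
```

Under these assumptions the bound grows quickly with the refractive index, which is consistent with the article's point that a wider FOV is mainly a materials problem.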

Additionally, adding a display (monochrome or full-color) increases power consumption and weight, forcing manufacturers to balance:

✔ Information density

✔ Battery life

✔ Comfort

Until battery tech improves, this remains an unsolved challenge.

2. AI Models Aren’t Smart Enough

Current AI models (like ChatGPT) are trained on internet data and generate responses based on patterns—not true understanding. They lack:

Intent recognition (they don’t grasp the why behind questions).

Emotional intelligence (no genuine awareness or decision-making).

For AI glasses to become indispensable, they must evolve beyond simple Q&A into context-aware, proactive assistants.

The Future of AI Glasses

While early adopters may embrace them, mass adoption hinges on solving:

🔹 Hardware limitations (FOV, battery life, comfort).

🔹 AI’s real-world utility (moving beyond chatbots to true intelligence).

Only then will AI glasses transition from a novelty to a necessity.

Thoughts? Would you buy AI glasses in their current state, or are you waiting for major upgrades? Let’s discuss in the comments!